Abstract:

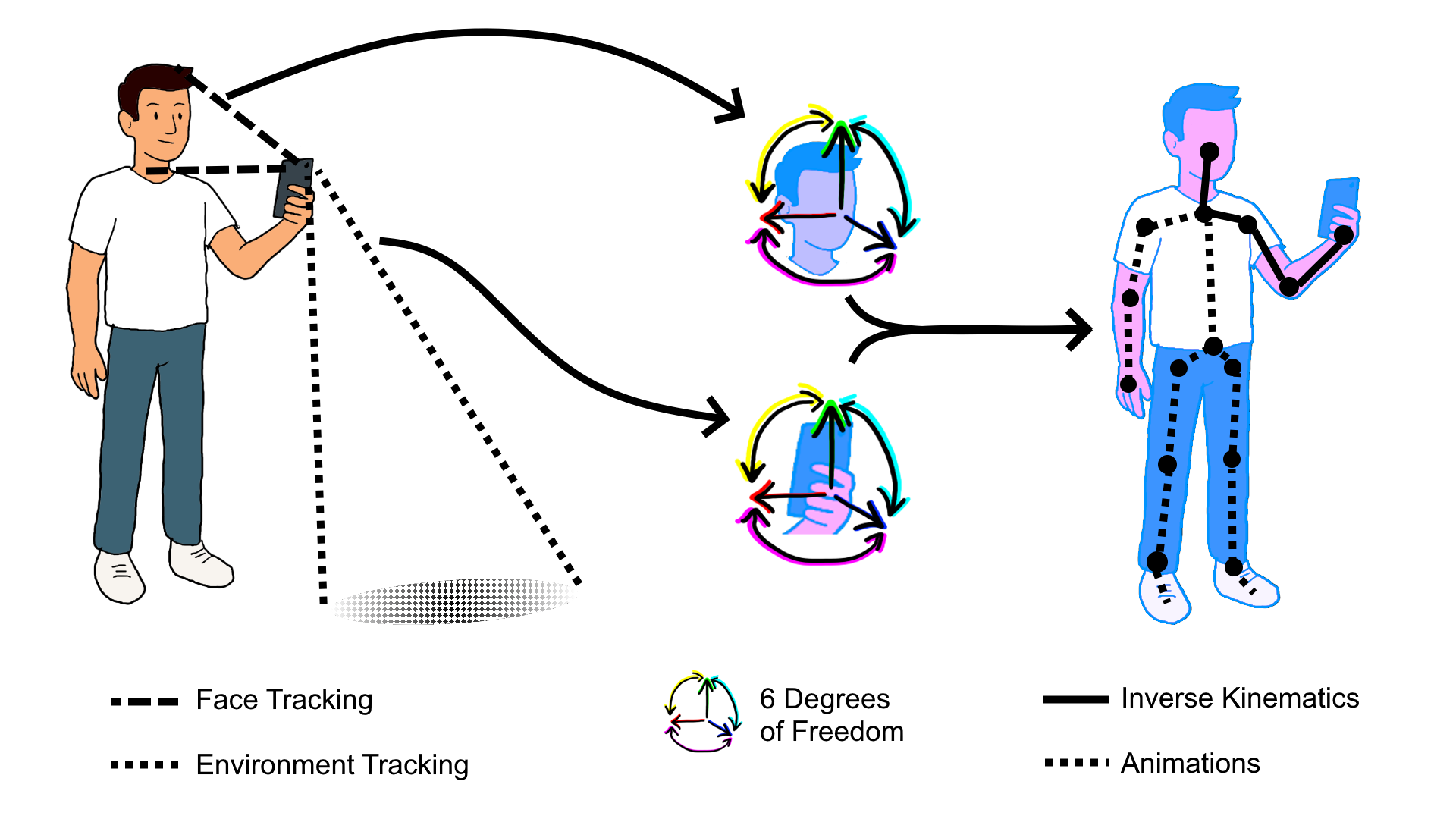

Smartphone Augmented Reality (AR) has already enabled numerous social applications. However, extending smartphone AR to support social experiences akin to VRChat or AltspaceVR requires robust user tracking to animate avatars accurately. While Virtual Reality (VR) benefits from hand-tracking systems, controllers, and head-mounted displays (HMDs) for precise avatar tracking, smartphone AR is limited to the back and front cameras and inertial measurement units (IMUs). Previous work has explored animating smartphone users’ avatars using single-point Inverse Kinematics (IK) driven by smartphone tracking data. In this paper, we propose an enhanced approach that combines face tracking with single-point IK to improve avatar animation. Our system leverages ARKit to track the positions and orientations of the hand and face and estimates the user’s pose through IK. We evaluate our system against a commercial motion tracking system and traditional single-point IK in terms of tracking accuracy, user embodiment, and user experience. Our findings suggest that our dual-point IK (DPIK) method achieves significantly lower mean tracking errors and more consistent performance across joints than traditional single-point IK. Although differences in embodiment measures were not statistically significant, users reported a stronger sense of control and agency, particularly for head-tracked movements. Participants also favored DPIK in terms of both Pragmatic and Hedonic qualities, highlighting its potential for improving avatar representation in social AR experiences.
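The abstract does not specify which IK solver DPIK uses. The following is a minimal sketch of the dual-point idea, built on a standard analytic two-bone IK solver that is swapped in here for illustration and is not necessarily the authors' method. It assumes that the head position from face tracking anchors the shoulder and that the phone position (held in the hand) serves as the end-effector target. The shoulder offset, bone lengths, downward pole vector, and all function names (`two_bone_ik`, `estimate_arm`, `mean_joint_error`) are hypothetical. `mean_joint_error` assumes that "mean tracking error" is the mean Euclidean distance between estimated and reference (e.g. motion-capture) joint positions.

```python
import math

Vec3 = tuple[float, float, float]


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def norm(a: Vec3) -> float:
    return math.sqrt(dot(a, a))


def normalize(a: Vec3) -> Vec3:
    return scale(a, 1.0 / norm(a))


# Hypothetical head-to-right-shoulder offset in metres (y up); not taken from the paper.
HEAD_TO_SHOULDER: Vec3 = (0.18, -0.25, 0.0)


def two_bone_ik(root: Vec3, target: Vec3, upper_len: float,
                lower_len: float, pole: Vec3) -> tuple[Vec3, Vec3]:
    """Analytic two-bone IK: returns (elbow, end) for a chain rooted at `root`."""
    to_target = sub(target, root)
    if norm(to_target) < 1e-12:
        raise ValueError("target coincides with the chain root")
    # Clamp the reach so the triangle inequality holds (the arm cannot over-extend).
    eps = 1e-9
    dist = min(max(norm(to_target), abs(upper_len - lower_len) + eps),
               upper_len + lower_len - eps)
    direction = normalize(to_target)
    # Law of cosines: angle at the root between the reach direction and the upper bone.
    cos_a = (upper_len ** 2 + dist ** 2 - lower_len ** 2) / (2 * upper_len * dist)
    cos_a = max(-1.0, min(1.0, cos_a))
    sin_a = math.sqrt(1.0 - cos_a ** 2)
    # Bend plane: component of the pole vector perpendicular to the reach direction.
    pole_vec = sub(pole, root)
    bend = sub(pole_vec, scale(direction, dot(pole_vec, direction)))
    if norm(bend) < 1e-9:
        # Pole is collinear with the chain; fall back to any perpendicular axis.
        axis = (1.0, 0.0, 0.0) if abs(direction[0]) < 0.9 else (0.0, 1.0, 0.0)
        bend = cross(direction, axis)
    bend = normalize(bend)
    elbow = add(root, add(scale(direction, upper_len * cos_a),
                          scale(bend, upper_len * sin_a)))
    end = add(root, scale(direction, dist))
    return elbow, end


def estimate_arm(head_pos: Vec3, phone_pos: Vec3,
                 upper_len: float = 0.30, lower_len: float = 0.27):
    """Dual-point sketch: the face-tracked head anchors the shoulder, the phone drives the hand."""
    shoulder = add(head_pos, HEAD_TO_SHOULDER)
    pole = add(shoulder, (0.0, -1.0, 0.0))  # assume the elbow bends downward
    elbow, hand = two_bone_ik(shoulder, phone_pos, upper_len, lower_len, pole)
    return shoulder, elbow, hand


def mean_joint_error(estimated: list[Vec3], reference: list[Vec3]) -> float:
    """Mean Euclidean distance between matching joints (e.g. against motion capture)."""
    if not estimated or len(estimated) != len(reference):
        raise ValueError("joint lists must be non-empty and of equal length")
    errors = [norm(sub(e, r)) for e, r in zip(estimated, reference)]
    return sum(errors) / len(errors)


if __name__ == "__main__":
    head = (0.0, 1.6, 0.0)      # hypothetical face-tracked head position
    phone = (0.2, 1.2, -0.35)   # hypothetical phone position in the right hand
    print(estimate_arm(head, phone))
```

The design point the sketch illustrates: with a single tracked point, the shoulder (and head) position must be guessed from the phone pose alone, whereas a second, face-tracked point pins the chain root, leaving the solver to resolve only the elbow.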

Published in: 2025 IEEE International Symposium on Mixed and Augmented Reality (ISMAR)